Intellectual Property and GenAI: From Principles to Practice

By Marsya Amnee Mohammad Masri¹, Lawrence Tan² & Thila Vijayan³

¹ Executive – Strategy and Communications, Sunway iLabs

² Founder of LAWENCO | Advocates & Solicitors, Director of IP Genesis

³ Director of Learning, 42 Malaysia | Sunway FutureX DI

Where We Left Off

Previously, we covered how the law has so far tended to treat AI training as fair use. What’s still off-limits, though, is imitation. If generated content too closely resembles existing copyrighted material, it can still amount to infringement. In other words, training an AI model and using its output are two very different things. And for organisations using generative AI in real-world settings, that difference matters.

Precedents in the Making

While legal frameworks around AI and copyright are still evolving, some companies are choosing a more proactive route: working directly with rightsholders.

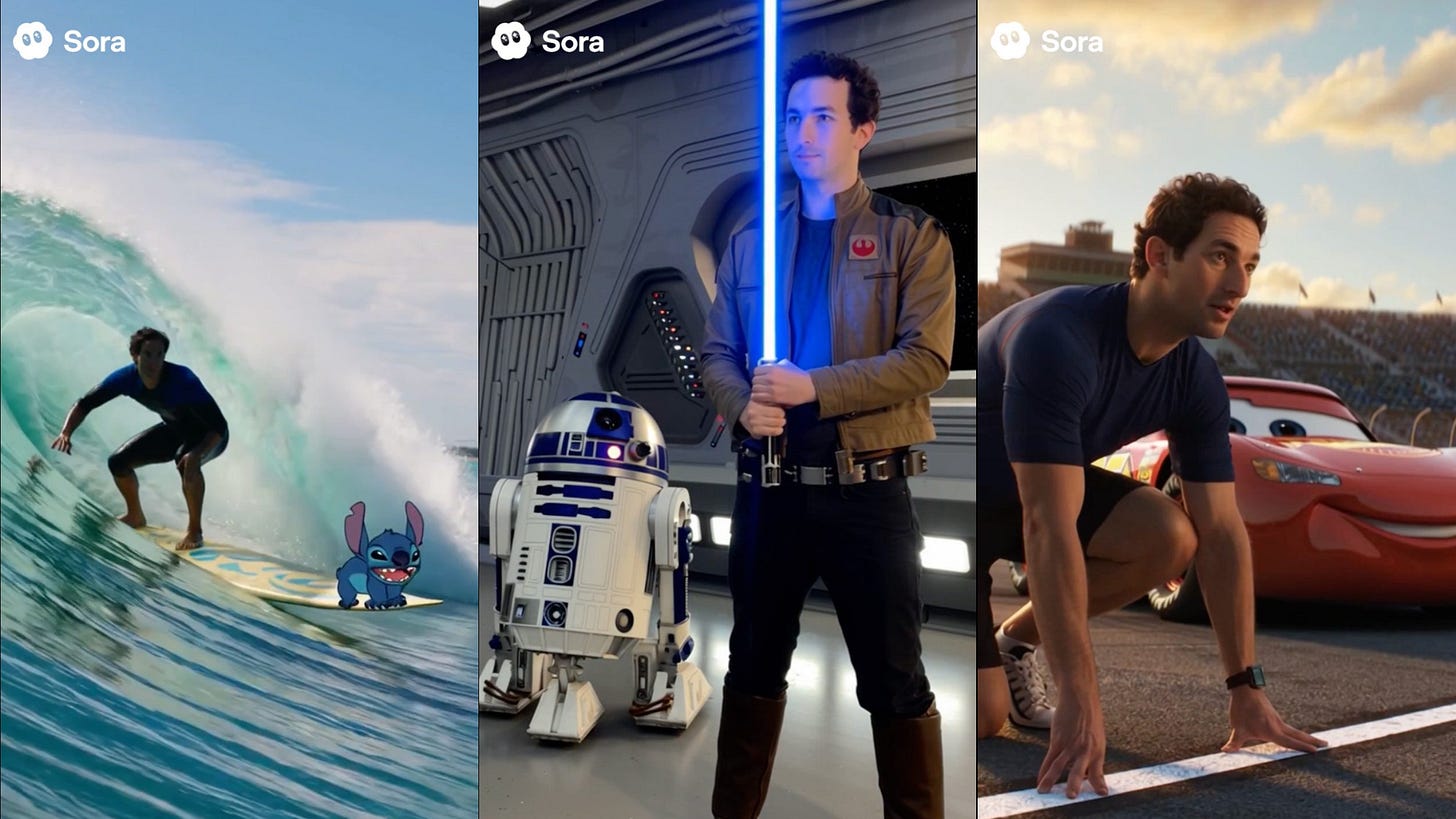

One of the most high-profile examples is the partnership between the Walt Disney Company and OpenAI, which enables OpenAI’s video platform, Sora, to generate videos featuring more than 200 characters from Disney, Pixar, Marvel, and Star Wars.

In the music industry, KLAY Vision Inc. has taken a similar approach. It has signed licensing deals with major record labels including Universal Music Group, Sony Music Entertainment, and Warner Music Group, so that its AI models are trained on authorised music rather than on unverified data sources.

These emerging patterns suggest that leading AI firms are deliberately adopting more responsible approaches to creative works. That, in turn, shifts the focus to the individuals and companies using AI today:

How should organisations use AI tools responsibly in their own work?

When using generative AI, the risk often isn’t intentional copying but accidental resemblance. Because generative models produce outputs from patterns learned during training, what they produce can sometimes end up substantially similar to existing copyrighted works.

That distinction matters. Intentionally prompting an AI to replicate specific copyrighted materials is a conscious choice, not a technical accident, and responsibility for that choice sits with the user.

Responsible GenAI Use 101

There’s no single checklist that eliminates IP risk, but a few practical measures can make a meaningful difference and allow teams to continue using generative AI productively.

1. Originality over convenience

AI should support human creativity, not replace it. When using these tools, organisations should prioritise meaningful human input, such as creative direction, editing and judgement, to ensure outputs don’t drift too close to existing works.

This aligns with guidance issued by the U.S. Copyright Office in early 2025, which clarified that for any part of a work to be copyrightable, there must be a meaningful human authorial contribution. If a machine does most of the heavy lifting without sufficient human control over the expressive elements, the AI-generated material is not protected by copyright and effectively remains in the public domain.

One practical place to apply this is at the prompt level. Highly specific prompts that reference recognisable styles or aim to replicate a particular aesthetic increase the risk of outputs resembling protected works. Avoid asking AI to create content “in the style of” identifiable creators, works or brands, such as:

“Write like [famous author]”

“Draw like [artist]”

“Make a logo like [brand]”

The goal is to create something new, not a lightly modified version of someone else’s work. Thoughtful prompting, combined with human refinement, helps keep AI-generated content on the right side of originality.

2. Rules before tools

Organisations should set out clear AI-use policies: which AI tools may be used, for what purposes, and what data may or may not be entered into them. One approach is to group AI use into three risk-based levels:

Low-risk:

Everyday tasks that do not involve sensitive or proprietary information, such as brainstorming ideas, summarising non-confidential text, or drafting internal emails, can generally be considered acceptable. Organisations can mandate a simple disclosure statement for any AI-assisted work to maintain transparency.

Medium-risk:

Content intended for external use, such as marketing drafts, images, or code prototypes, should go through basic review, including plagiarism scans, reverse image searches, and licence and open-source code checks. These steps help ensure the output is appropriate and doesn’t closely resemble existing copyrighted materials.

High-risk:

Confidential information, trade secrets or client data should never be entered into public AI platforms without safeguards. These materials require greater control. They should only be handled in secure environments, such as internal LLMs hosted on-premises or private enterprise AI instances where data remains within the organisation’s network.

Organisations with the highest security requirements can also adopt confidential computing. Offered by major cloud providers, this technology runs workloads inside hardware-isolated, encrypted environments, so data stays protected even while the AI is actively processing it. This helps guard sensitive information against both external leaks and unauthorised internal access.

These guidelines keep decision-making simple, help teams work confidently with AI, and reduce the risk of accidental misuse (WIPO, 2024).
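For teams that want to build the three tiers into an internal request form or approval workflow, the decision can be expressed as a small lookup. The sketch below is a minimal, hypothetical illustration, assuming each proposed use is described by just two yes/no attributes (whether it involves confidential data and whether the output is for external use). The names `AIUse`, `classify_use` and `REQUIRED_CONTROLS` are invented for this example and are not taken from WIPO guidance or any standard; a real policy would need more nuance.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class AIUse:
    """A proposed use of a generative AI tool (hypothetical fields)."""
    description: str
    involves_confidential_data: bool  # trade secrets, client data, etc.
    for_external_use: bool  # e.g. published marketing copy, images, shipped code


# Hypothetical controls per tier, paraphrasing the three levels described above.
REQUIRED_CONTROLS = {
    RiskLevel.LOW: ["optional AI-assistance disclosure statement"],
    RiskLevel.MEDIUM: [
        "plagiarism scan",
        "reverse image search (for images)",
        "licence and open-source code check (for code)",
    ],
    RiskLevel.HIGH: [
        "do not enter into public AI platforms",
        "use only an on-premises LLM or private enterprise AI instance",
    ],
}


def classify_use(use: AIUse) -> RiskLevel:
    """Assign a risk tier; confidential data always takes precedence."""
    if use.involves_confidential_data:
        return RiskLevel.HIGH
    if use.for_external_use:
        return RiskLevel.MEDIUM
    return RiskLevel.LOW


if __name__ == "__main__":
    examples = [
        AIUse("Brainstorm names for an internal event", False, False),
        AIUse("Draft a social media post", False, True),
        AIUse("Summarise a client contract", True, False),
    ]
    for use in examples:
        level = classify_use(use)
        print(f"{use.description}: {level.value} -> {REQUIRED_CONTROLS[level]}")
```

Checking for confidential data first reflects the point that such material needs the strongest controls regardless of where the output ends up.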

3. Know the fine print

Not all AI tools treat ownership and liability the same way. Reviewing the terms and conditions of AI platforms helps organisations make informed decisions about which tools are appropriate for their use.

Some AI platforms, such as Midjourney, disclose in their terms that they may reuse user-generated content, including for purposes unrelated to AI training.

In practice, organisations should know:

Who owns the output

How the content may be reused

Which responsibilities the AI platform does and does not cover

Because platform terms can change quickly as the sector evolves, organisations may also consider reviewing the AI tools they use at regular intervals, for example quarterly, to stay aware of any updates to data usage, ownership rights, or liability terms that could affect how those tools are used internally or externally.

Moving Forward

As AI tools become part of everyday workflows, it’s worth remembering that while AI training may be allowed, copyright rules still apply to what AI produces. AI can speed up creation, but it doesn’t remove the need for review, judgement, or responsibility.

For organisations, this means being mindful of how data is handled, understanding what rights platforms retain over generated content and ensuring outputs are reviewed before external use.

And for all of us, the principle is the same: use AI to support creativity, not shortcut it. Prioritising originality over imitation remains key. AI is a powerful tool, but how responsibly it’s used by companies and individuals alike is what ultimately shapes its impact.

Disclaimer: This article is for general information only and does not constitute legal advice.

Acknowledgements: Thank you to the Sunway iLabs team for their invaluable contribution and insights in preparing this article.

References:

Corcoran, N. (2025). Major labels sign licensing deals with AI music company Klay. Pitchfork.

Generative AI. (2025). Authors Alliance.

Guan, L. (2025, June 17). Securing Gen AI With Confidential Computing. Accenture.

U.S. Copyright Office Clarifies AI Authorship Rules in Part II of Its Copyright & AI Report. (2025).